
Object tracking is one of the most important and fundamental technologies for understanding dynamic situations, because most dynamic situations in a scene can be characterized by object motions. To understand a dynamic situation, we should therefore focus on the moving objects and obtain information about them. To be applicable to real-world systems, an object tracking method has to cope with complicated situations and run in real time.

In this thesis, we propose a real-time, flexible object tracking system. Our objective is a tracking system that adaptively changes its behavior depending on the situation and task, and that persistently keeps tracking the focused target objects.

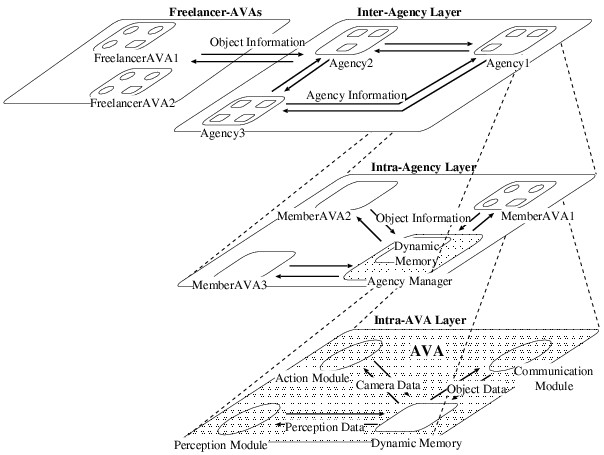

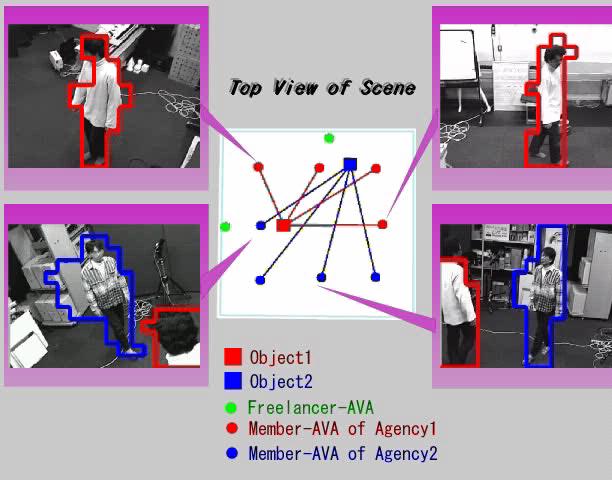

To realize real-time multi-target tracking over a wide area, we employ the idea of Cooperative Distributed Vision (CDV). A CDV system consists of communicating Active Vision Agents (AVAs), where an AVA is a logical model of an Observation Station (a real-time image processor with one or more active cameras). For real-time object tracking by multiple AVAs, we have to solve three problems: (1) how to design an active camera for dynamic object detection and tracking, (2) how to realize real-time object tracking with an active camera, and (3) how to realize cooperation among AVAs for real-time multi-target tracking.

First, for wide-area active imaging, we developed a Fixed-Viewpoint Pan-Tilt-Zoom (FV-PTZ) camera. This camera is designed so that the projection center always coincides with the rotational center, irrespective of the pan, tilt and zoom controls. This property allows the system (1) to generate a wide panoramic image by mosaicking multiple images observed while changing the pan-tilt-zoom parameters and (2) to synthesize, from the wide panoramic image, the image that would be taken with any pan-tilt-zoom parameters. With the FV-PTZ camera, we can realize an active camera system that detects anomalous regions in the observed image by comparing it with the synthesized background image (the background subtraction method).
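The comparison step can be illustrated with a minimal sketch. It is not the implementation used in the thesis: it assumes grayscale images stored as nested lists, a fixed intensity threshold, and a hypothetical `crop_background` helper that stands in for view synthesis by simply cutting a window out of the panorama. A real FV-PTZ system would instead reproject the panorama according to the current pan-tilt-zoom parameters.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of 0-255 intensities


def crop_background(panorama: Image, top: int, left: int,
                    height: int, width: int) -> Image:
    """Simplified stand-in for view synthesis (an assumption of this sketch):
    cut the window of the panorama that the camera is assumed to see."""
    return [row[left:left + width] for row in panorama[top:top + height]]


def subtract_background(observed: Image, background: Image,
                        threshold: int = 30) -> List[List[bool]]:
    """Return a mask that is True where the observed intensity differs
    from the background by more than `threshold`."""
    same_size = len(observed) == len(background) and all(
        len(o) == len(b) for o, b in zip(observed, background)
    )
    if not same_size:
        raise ValueError("observed and background images must have the same size")
    return [
        [abs(o - b) > threshold for o, b in zip(obs_row, bg_row)]
        for obs_row, bg_row in zip(observed, background)
    ]


# Usage: a uniform panorama and an observation with two changed pixels.
panorama = [[10] * 6 for _ in range(4)]
background = crop_background(panorama, top=1, left=2, height=2, width=3)
observed = [[10, 12, 200], [10, 90, 10]]
mask = subtract_background(observed, background)
```

The threshold value of 30 is arbitrary; in practice it would be tuned to the camera noise and lighting conditions.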


Next, for real-time object detection and tracking, we designed an Active Background Subtraction method for the FV-PTZ camera. To keep gazing at the target during tracking, the system incorporates a flexible control system named the Dynamic Memory Architecture, in which multiple parallel processes share what we call the Dynamic Memory to dynamically integrate the visual perception and camera action modules. The Dynamic Memory enables parallel modules to obtain information from one another asynchronously without disturbing their own intrinsic dynamics (experimental result: movie file).
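The idea of asynchronous sharing can be sketched as follows. This is only an illustration under assumptions the text does not state: each shared variable keeps a timestamped history, and a reader obtains the value at any requested time by linear interpolation (or extrapolation beyond the newest sample), so it never has to wait for the writer. The class name `DynamicMemoryVariable` and its methods are hypothetical.

```python
import threading
from bisect import bisect_right
from typing import List


class DynamicMemoryVariable:
    """Hypothetical shared variable with a timestamped history.

    Writers append (time, value) samples at their own pace; readers ask
    for the value at any time and never wait for a writer to produce one.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._times: List[float] = []
        self._values: List[float] = []

    def write(self, t: float, value: float) -> None:
        with self._lock:
            if self._times and t <= self._times[-1]:
                raise ValueError("timestamps must be strictly increasing")
            self._times.append(t)
            self._values.append(value)

    def read(self, t: float) -> float:
        # Hold the lock only long enough to copy the history.
        with self._lock:
            times, values = list(self._times), list(self._values)
        if not times:
            raise LookupError("no value has been written yet")
        if len(times) == 1:
            return values[0]
        # Choose the segment around t; times outside the history reuse the
        # first or last segment, which gives linear extrapolation.
        i = min(max(bisect_right(times, t), 1), len(times) - 1)
        t0, t1 = times[i - 1], times[i]
        v0, v1 = values[i - 1], values[i]
        return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


# Usage: a perception thread writes pan angles; the action side reads them
# at times of its own choosing.
pan_angle = DynamicMemoryVariable()
writer = threading.Thread(
    target=lambda: [pan_angle.write(float(k), 10.0 * k) for k in range(3)]
)
writer.start()
writer.join()
interpolated = pan_angle.read(0.5)  # between two samples
predicted = pan_angle.read(3.0)     # beyond the newest sample
```

Linear extrapolation is the simplest possible predictor; a tracking system might substitute a motion model suited to the camera and target dynamics.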


Finally, to implement real-time cooperation among AVAs, we designed a three-layered interaction architecture:
