Continuous Finger Gesture Spotting and Recognition based on Similarities between Start and End Frames

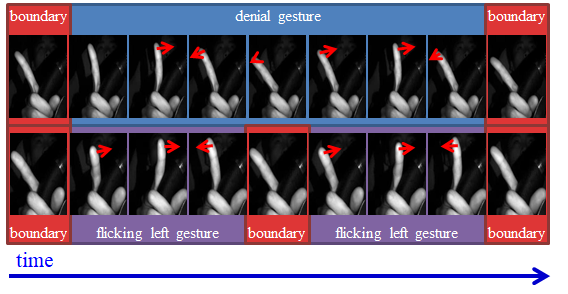

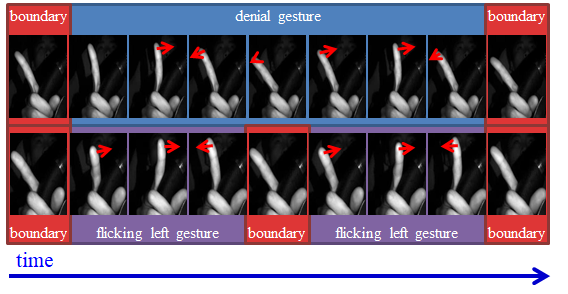

Touchless in-car devices controlled by single and continuous finger gestures can improve comfort and safety when drivers operate secondary devices. Recognizing finger gestures is challenging because of (i) similarities between gesture and non-gesture frames and (ii) the difficulty of identifying the temporal boundaries of continuous gestures. In addition, (iii) the intraclass variability of gesture duration is a critical issue when recognizing finger gestures intended to control in-car devices. To address difficulties (i) and (ii), we propose a gesture spotting method in which continuous gestures are segmented by detecting boundary frames and evaluating hand similarities between the start and end boundaries of each gesture. We then introduce a gesture recognition method based on a temporal normalization of the features extracted from the set of spotted frames, which overcomes difficulty (iii). This normalization represents any gesture with the same limited number of features. We ensure real-time performance by proposing an approach based on compact deep neural networks. Moreover, we demonstrate the effectiveness of our proposal with a second approach based on hand-crafted features, which runs in real time even without a GPU. Furthermore, we present a realistic driving setup used to capture a dataset of continuous finger gestures, which includes more than 2,800 instances in untrimmed videos covering safe-driving requirements. On this dataset, our two approaches run at 53 fps on GPU and 28 fps on CPU, respectively, around 13 fps faster than previous works, while achieving better performance (at least 5% higher mean tIoU).
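To make two of the notions above concrete, the following is a minimal sketch and not the implementation evaluated in this work. It assumes that tIoU follows the usual temporal intersection-over-union definition on inclusive frame intervals, and it uses linear interpolation as a stand-in for the temporal normalization, whose exact form is not given here. The names `temporal_iou` and `normalize_length` are hypothetical.

```python
from typing import List, Sequence, Tuple


def temporal_iou(pred: Tuple[int, int], truth: Tuple[int, int]) -> float:
    """Temporal IoU of two inclusive frame intervals given as (start, end)."""
    # Overlap in frames; clamped to zero when the intervals are disjoint.
    inter = max(min(pred[1], truth[1]) - max(pred[0], truth[0]) + 1, 0)
    union = (pred[1] - pred[0] + 1) + (truth[1] - truth[0] + 1) - inter
    return inter / union


def normalize_length(features: Sequence[Sequence[float]], n: int) -> List[List[float]]:
    """Resample a variable-length sequence of per-frame feature vectors to n vectors.

    Assumption: linear interpolation along the time axis. The source only
    states that every gesture ends up with the same number of features.
    """
    if not features or n < 1:
        raise ValueError("need at least one frame and n >= 1")
    last = len(features) - 1
    out: List[List[float]] = []
    for i in range(n):
        # Fractional position of output sample i in the input sequence.
        pos = i * last / (n - 1) if n > 1 else 0.0
        lo = int(pos)
        hi = min(lo + 1, last)
        w = pos - lo
        out.append([(1 - w) * a + w * b for a, b in zip(features[lo], features[hi])])
    return out


if __name__ == "__main__":
    # A spotted gesture over frames 10..19 against a ground truth of 15..24.
    print(temporal_iou((10, 19), (15, 24)))
    # Three frames of 1-D features stretched to five samples.
    print(normalize_length([[0.0], [1.0], [2.0]], 5))
```

Under these assumptions, a short and a long instance of the same gesture are both mapped to `n` feature vectors, so a recognizer with a fixed input size can consume either.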